LOL – first day of school for the kids today.

Obviously, I slept in. Planned to leave by 8:20 but woke up at 7:30. We’re out of bread. And my son was going to make a sandwich for lunch. That’s ok, I have time to run to the store and back.

Head out to the car. It’s not unlocking with the button, which is kind of odd. Open it up with the key. The light selector is turned to running lights… oh boy. I forgot to check the knobs after taking the toddler out of the front seat yesterday. I tried to turn the car on. Nothin.

I searched through two of our emergency kits. I have two sets of jumper cables, odd brands of band-aids, flashlights, batteries, foil blankets, cigarette lighter air compressors… but nothing that lets me jump my car on my own.

Not a problem, there’s a dude chillin in his truck two houses down. I walked over. He seemed annoyed, but drove over to help me jump my car. I open the hood. I can’t find the battery, neither of us can. Where in the heck is the battery on this Transit?!

Oh… it’s underneath the driver’s seat. You literally have to take the driver’s seat out to reach the battery. I apologize to my neighbor for wasting his time. It’s 7:58.

I figured I’d search the internet for how to jump a Ford Transit. Oh – there are specific jumper terminals in the main engine compartment. I found them. Turns out our Transit is missing the very noticeable red jumper cable label that points directly to the terminal on most other Transits. It must’ve broken off. Had it been there, my neighbor and I (first time I met him, by the way) would’ve found it.

Great, I found the terminals! But my neighbor is gone…

I called my brother-in-law, who lives a few minutes away, and asked if he could come jump my car. He could, but it would take him a bit to head over. My son probably isn’t going to get his sandwich.

Brother-in-law is here. I glance at the clock. It’s 8:15. Alright, I hope the car jumps. It totally does. Whew! I thank my brother-in-law profusely. He takes off, it’s 8:19. The kids get in the car. We’re out of there by 8:20 and I get the kids dropped off at school on time. My son doesn’t have a sandwich.

Yes, our kids are homeschooled. But for high school they’re going to a brand new co-op. They get one day of in-class lessons from the teacher a week, and the rest of the week is homeschooled.

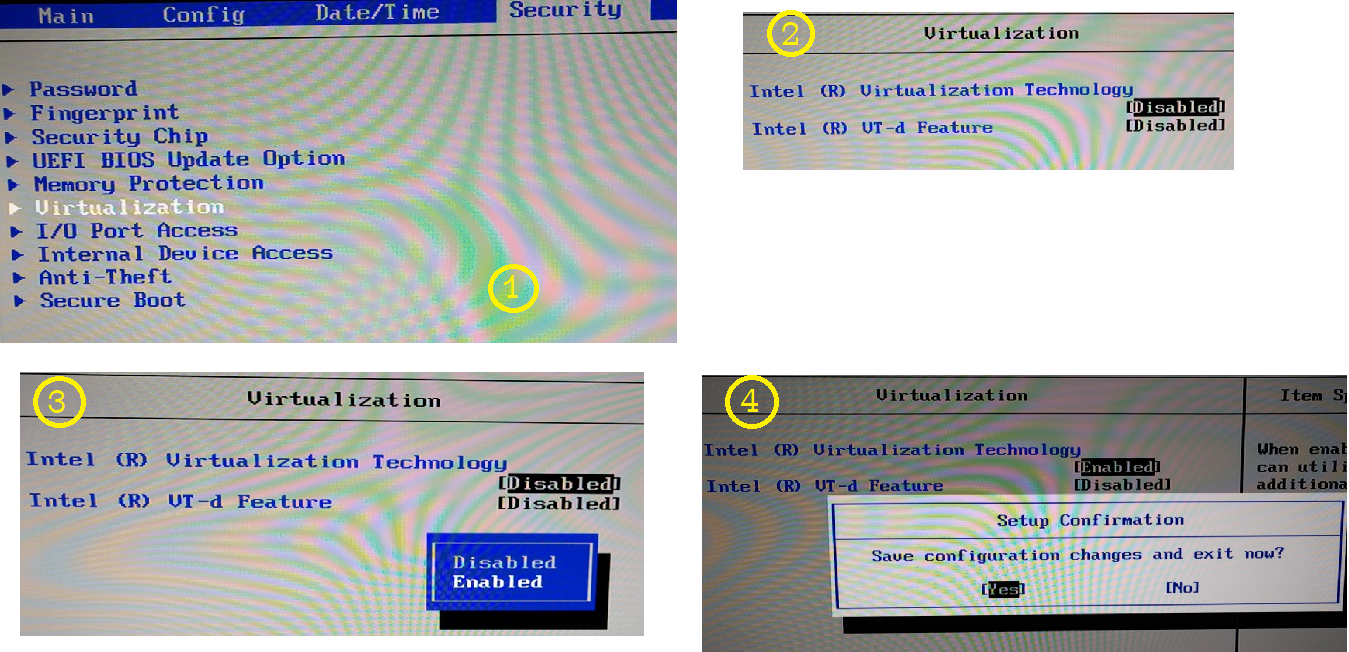

If only I’d “jumped” on a post from my boss 6 years ago 🤣

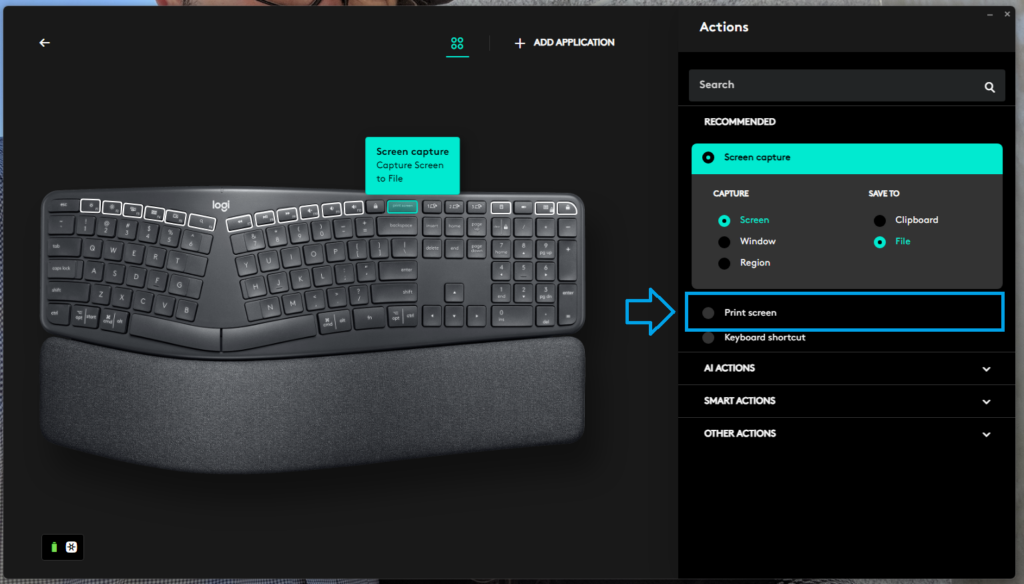

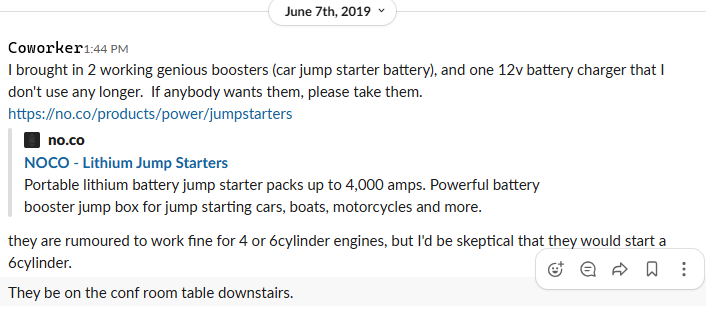

Anyways, after this whole situation I bought one of these Wolfbox things. Gonna be totally prepared next time. You never know when your toddler will leave your car lights on.

https://amzn.to/3JW5tYe 👈 (this is totally an affiliate link. 😆)

💡Heads up: I may earn a small commission if you buy through this link – at no extra cost to you!